Using Simulated Data with ChatGPT to Improve your Marketing Analysis

Marketers that can learn to code (just a little bit) will have superpowers

At Forrester B2B in June 2023, founder and CEO of Forrester Research George F. Colony said: “ChatGPT has arrived and Google is dead - you better be prepared.” (paraphrased)

Not sure I agree with the Google bit, although Search is definitely undergoing a massive transformation and I see how Google’s ad business is at risk. Nonetheless I appreciated the warning shot because I think marketers need to wake up to the gravity of the situation.

In my view, the most disruptive thing ChatGPT is going to do for companies is democratize the ability for non-coders to acquire basic coding skills and 10x their productivity. This means Marketers that can learn to code (just a little bit) will have superpowers.

If you are a marketer in a SaaS company, most likely you aren’t writing code to automate your workflows, there’s just too much on your plate to pick up that skillset. And not everyone has an affinity to writing code. Yet so much of the job of a marketing and business leader involves data, and if you look at any job posting it will require the person is “data driven.”

What does it mean to be “data driven” today? It means (1) being a glutton for punishment, and (2) being able to do the data dance. You know what I mean with the data dance — I’m talking about the data exports, pivot tables, and integrations between Salesforce, Tableau, Google Adwords, your data warehouse, that new tool your analytics team just onboarded (what the hell is that thing called again?) and more one-off tools than you can count. Despite how annoying it can be, there’s arguably no skill more important than learning how to find business insights to ultimately improve your GTM and drive marketing results.

Enter: Generative AI

Generative AI offers a plethora of use-cases for marketers, and in this post we will look at the Data Analysis use-case and some simple code generation. For completeness, here are some of the use-cases I’ve been playing around with so far.

Improving landing pages

Building tighter copy

Competitive Intelligence

Event planning

Market sizing exercises

Re-purposing or re-factoring content

Data analysis

(Note: In my own personal capacity, I have paid plans with both OpenAI and Jasper AI to experiment with the above use-cases and try to learn as much as possible)

While ChatGPT offers significant time savings for marketers, one of the current challenges is that organizations must bring their own large language model (LLM) in-house before fully realizing the value of their own corpus of data. For example, to have an awesome chatbot that is powered by an LLM you need historical data on your support tickets (data to train the model), you need the model itself, and you need the application layer the Support Team will actually use.

With the exception of a few vertical solutions, the industry isn’t mature enough for most companies to roll their own LLM and build an end-to-end internal application, although within 6-12 months it will most likely be there. This is why you are seeing an arms race for data infrastructure companies to go buy the IP to build out their stack, like the $1.3B deal for Databricks deal to buy MosaicML.

Currently, if you are a marketer doing anything beyond the most basic use-cases, publicly available LLM’s should be fire-walled from your company for security purposes. This means that if you are working on data problems, it’s hard to get started with a representative dataset to run an example analysis. (IMPORTANT: you should never use your company data or put any sensitive information in ChatGPT.)

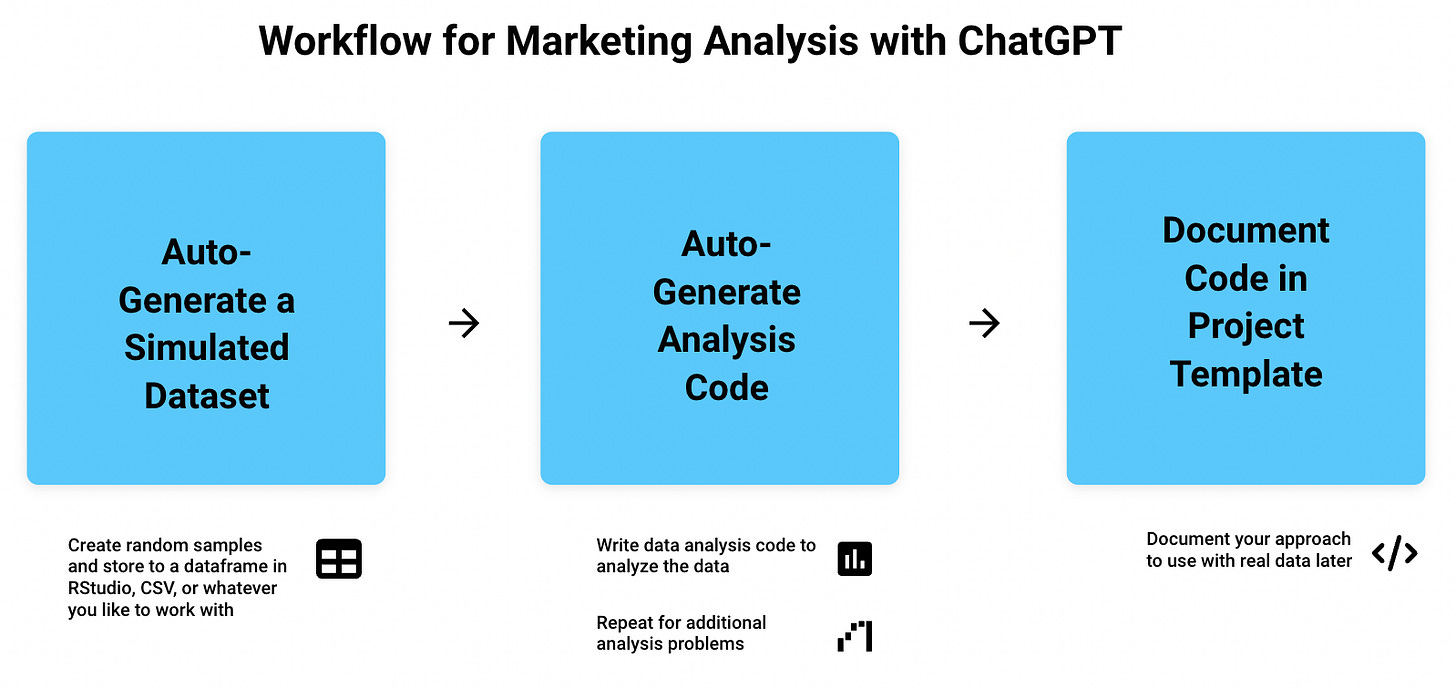

Instead, what you can do is use ChatGPT to build a simulated dataset and then practice building prompts and analysis that are being driven by 100% randomized data. Once you have a sample dataset, that can jumpstart your exploration to help write code for data analysis. Furthermore, these simple “simulations” can be brought behind your firewall and deployed in your own environment, without ever sharing your data with ChatGPT. Example workflow below:

Here’s an example of the very first step in the process - using ChatGPT to create a simulated dataset. Let’s take a made-up example (that is admittedly quite meta). Assume we work at a company and sell Generative AI software to Fortune 500 companies. By the way, this will be fun to see if OpenAI even let’s me proceed with this prompt or returns some type of snarky answer :)

In our example, the company sells to HR, Customer Support, or Sales departments. Using a basic prompt, I can have ChatGPT create a randomized sample of Opportunity data, to then feed into my example analysis in a future step. (This would be similar to an SFDC report or some other CRM report to understand your pipeline)

Prompt for ChatGPT

Let's assume I work for a software company that sells Generative AI tools to Fortune 500 companies, that competes with OpenAI. (if this hits too close to home...my apologies) Create an R script that stores 100 samples to a dataframe called "opportunities".

The script should first generate a function called "Create New Opportunities" and then it should call the function and assign the output to the dataframe called "opportunities".

1. Generate 100 randomized samples (or rows) in the dataframe.

2. The dataframe should have the following column names:

a. Company Name - where the company name is randomly chosen from the Fortune 500 list. (I can't provide you with a list, so just use 20 representative company names based in the US)

b. Opportunity Name - where the opportunity name starts with 1 of 3 hyperscalers (AWS, Azure, or GCP), and can be 1 of 3 types of opportunities based on end user department (HR, Tech Support, or Sales) with this naming convention "AWS - HR", "Azure - Tech Support", "GCP - Sales".

c. Opportunity Size (MRR) - $5000, $20000, or $50000

d. Active Trial - yes / no

e. Opp Creation Date - should be DD-MM-YYYY format sometime after 01-01-2023

f. Stage - Use one of the following: Qualification, POC, Negotiation, Closed Won, Closed Lost

Code Generated

# Install and load necessary packages

library(dplyr)

library(stringr)

# Define the function to create new opportunities

CreateNewOpportunities <- function(num_samples) {

# List of Fortune 500 company names (based on US companies)

company_names <- c("Walmart", "Amazon", "Exxon Mobil", "Berkshire Hathaway", "Apple",

"UnitedHealth Group", "McKesson", "CVS Health", "AmerisourceBergen",

"Chevron", "Ford Motor", "General Electric", "AT&T", "General Motors",

"Verizon Communications", "Cardinal Health", "Costco Wholesale",

"Kroger", "Amazon", "Walgreens Boots Alliance")

# Vector of hyperscalers

hyperscalers <- c("AWS", "Azure", "GCP")

# Vector of opportunity types

opportunity_types <- c("HR", "Tech Support", "Sales")

# Vector of opportunity sizes

opportunity_sizes <- c("$5000", "$20000", "$50000")

# Vector of active trial status

active_trials <- c("yes", "no")

# Create a dataframe to store opportunities

opportunities <- data.frame(Company_Name = character(),

Opportunity_Name = character(),

Opportunity_Size = character(),

Active_Trial = character(),

Opp_Creation_Date = character(),

Stage = character(),

stringsAsFactors = FALSE)

# Generate random samples

for (i in 1:num_samples) {

# Randomly choose a company name

company_name <- sample(company_names, 1)

# Randomly choose a hyperscaler and opportunity type

hyperscaler <- sample(hyperscalers, 1)

opportunity_type <- sample(opportunity_types, 1)

# Create the opportunity name

opportunity_name <- paste(hyperscaler, "-", opportunity_type)

# Randomly choose an opportunity size

opportunity_size <- sample(opportunity_sizes, 1)

# Randomly choose an active trial status

active_trial <- sample(active_trials, 1)

# Generate a random date after 01-01-2023

start_date <- as.Date("2023-01-01")

end_date <- Sys.Date()

opp_creation_date <- sample(as.integer(start_date):as.integer(end_date), 1)

opp_creation_date <- as.Date(opp_creation_date, origin = "1970-01-01")

# Randomly choose a stage

stages <- c("Qualification", "POC", "Negotiation", "Closed Won", "Closed Lost")

stage <- sample(stages, 1)

# Add the opportunity to the dataframe

opportunities <- opportunities %>%

add_row(Company_Name = company_name,

Opportunity_Name = opportunity_name,

Opportunity_Size = opportunity_size,

Active_Trial = active_trial,

Opp_Creation_Date = format(opp_creation_date, "%d-%m-%Y"),

Stage = stage)

}

return(opportunities)

}

# Call the function to create new opportunities and assign the output to the dataframe

opportunities <- CreateNewOpportunities(100)

# Print the dataframe

print(opportunities)

As typically happens, I had to prompt ChatGPT 3-4 times after the initial code generation to correct minor coding errors. In a future experiment, I’ll run this test in Github Copilot and compare the user experience.

Result in RStudio by Posit

There you have it! That’s just step one in the data analysis process. If you are a marketer experimenting with ChatGPT, please connect with me so I can follow your content and share ideas.